Writing a docker volume plugin to manage remote secrets

I recently had to use a secret from AWS Secrets Manager in a docker container. My initial reflex was to pass in the secret as an environment variable. But environment variables leak easily: they show up in docker inspect and are inherited by every process in the container. So I decided the next best thing is to mount the secret as a file:

awslocal secretsmanager get-secret-value --secret-id=test --query SecretString --output text > /var/run/user/$UID/mysecret

docker run -it -v /var/run/user/$UID/mysecret:/run/secrets/mysecret ubuntu cat /run/secrets/mysecret

That’s all well and good, but can we do better? What if we could just pass in the secret-id to docker, and let it fetch the secret from AWS and mount it into the container for us?

Something like this:

docker run -it --volume-driver dsv -v mysecret:/run/secrets/mysecret ubuntu cat /run/secrets/mysecret

The advantages are slim (50% reduction in commands!), but they start stacking up if you’re managing secrets from multiple secrets managers (AWS, GCP, Azure, etc.).

Turns out we can do this by writing our own Docker Volume Plugin. In this post we’ll walk through the process of creating a docker plugin from scratch.

The complete source code can be found here

1. How Docker Plugins Work

Before we get started, what are docker plugins exactly?

Docker Plugins have been around for ages, and they allow you to extend docker’s functionality by running an external process which communicates with the docker daemon.

The docker daemon exposes APIs to extend functionality for authorization, volumes, networks and IPAM (IP Address Management).

For our purposes, we need to extend the volume functionality so that we can mount volumes containing secrets from a remote secrets manager.

Docker plugins are simple programs, and all they do is communicate with the docker daemon API using JSON data via a (unix or network) socket. This means they can be written in any language, but docker provides a convenient helper library in Go, so that’s what we’ll use.
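To make the "JSON over a socket" idea concrete, here is a minimal sketch of the handshake a plugin answers when the daemon activates it: a POST to /Plugin.Activate, answered with the list of plugin APIs it implements. It uses only the standard library, and the names newPluginMux and activate are made up for this illustration. The helper library does the equivalent for you.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// activateResponse is the JSON body returned from the handshake call.
type activateResponse struct {
	Implements []string `json:"Implements"`
}

// newPluginMux returns a handler that answers the plugin handshake.
// (Hypothetical helper name, for illustration only.)
func newPluginMux() *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc("/Plugin.Activate", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		// Tell the daemon which plugin APIs this process implements.
		json.NewEncoder(w).Encode(activateResponse{Implements: []string{"VolumeDriver"}})
	})
	return mux
}

// activate plays the daemon's role: it POSTs to /Plugin.Activate and
// returns the raw JSON reply with surrounding whitespace trimmed.
func activate(h http.Handler) string {
	req := httptest.NewRequest(http.MethodPost, "/Plugin.Activate", nil)
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	body, _ := io.ReadAll(rec.Body)
	return strings.TrimSpace(string(body))
}

func main() {
	fmt.Println(activate(newPluginMux()))
}
```

In a real plugin the same handler would be served on a socket rather than called in-process.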

2. Writing the plugin

The plugin helpers library allows us to create a docker volume plugin by implementing the following interface:

// Driver represents the interface a volume driver must fulfill.

type Driver interface {

Create(*CreateRequest) error

List() (*ListResponse, error)

Get(*GetRequest) (*GetResponse, error)

Remove(*RemoveRequest) error

Path(*PathRequest) (*PathResponse, error)

Mount(*MountRequest) (*MountResponse, error)

Unmount(*UnmountRequest) error

Capabilities() *CapabilitiesResponse

}

and running the following code to expose that interface to the docker daemon:

// Initialize a docker volume driver which implements the Driver interface

driver := volumes.DockerSecretsVolumeDriver{}

// Plugin starts and listens on a unix socket

handler := volume.NewHandler(&driver)

fmt.Printf("Listening on %s\n", SOCKET_ADDRESS)

handler.ServeUnix(SOCKET_ADDRESS, 0)

We want to make our solution as generic as possible - so that it can work with any remote secrets manager. As long as it’s able to retrieve secrets - we should be able to mount it as a (file) volume.

To enable this we’re going to create a new interface for a generic secrets backend that our volume plugin can call to retrieve secrets:

package secrets

// Stores all the details about a secret

type Secret struct {

Name string

Value string

Options map[string]string `json:"Opts,omitempty"`

}

// The type to request to store a new secret

type CreateSecret struct {

Name string

}

// The type to request a secret value

type GetSecret struct {

Name string

}

// The response type for requests to get a secret value

type GetSecretResponse struct {

Secret Secret

}

// This is the interface which all secretstore plugins must fulfil

type SecretStoreDriver interface {

Setup() error // Run any setup for the secrets backend

Create(*CreateSecret) error // Store a secret in the secrets backend

Get(*GetSecret) (*GetSecretResponse, error) // Get a secret value from the backend

}
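To show how little a backend needs to provide, here is a sketch of an in-memory implementation of this interface, the kind of thing that could be handy in tests. MemoryDriver and its fields are hypothetical and not part of the project. The types are repeated so the snippet compiles on its own, and treating Create as "register a secret that already exists remotely" is my assumption about the intended semantics.

```go
package main

import (
	"errors"
	"fmt"
)

// These types mirror the secrets package so the sketch is self-contained.
type Secret struct {
	Name  string
	Value string
}
type CreateSecret struct{ Name string }
type GetSecret struct{ Name string }
type GetSecretResponse struct{ Secret Secret }

type SecretStoreDriver interface {
	Setup() error
	Create(*CreateSecret) error
	Get(*GetSecret) (*GetSecretResponse, error)
}

// MemoryDriver is a hypothetical backend that keeps everything in maps.
type MemoryDriver struct {
	remote     map[string]string // stands in for the remote secrets manager
	registered map[string]bool   // secrets registered via Create
}

// Setup initialises the internal maps.
func (m *MemoryDriver) Setup() error {
	if m.remote == nil {
		m.remote = map[string]string{}
	}
	m.registered = map[string]bool{}
	return nil
}

// Create registers a secret, failing if the "remote" store does not have it.
func (m *MemoryDriver) Create(req *CreateSecret) error {
	if _, ok := m.remote[req.Name]; !ok {
		return errors.New("secret not found: " + req.Name)
	}
	m.registered[req.Name] = true
	return nil
}

// Get returns the value of a previously registered secret.
func (m *MemoryDriver) Get(req *GetSecret) (*GetSecretResponse, error) {
	if !m.registered[req.Name] {
		return nil, errors.New("secret not registered: " + req.Name)
	}
	return &GetSecretResponse{Secret: Secret{Name: req.Name, Value: m.remote[req.Name]}}, nil
}

// Compile-time check that MemoryDriver satisfies the interface.
var _ SecretStoreDriver = (*MemoryDriver)(nil)

func main() {
	d := &MemoryDriver{remote: map[string]string{"mysecret": "dontlookatme!"}}
	if err := d.Setup(); err != nil {
		panic(err)
	}
	if err := d.Create(&CreateSecret{Name: "mysecret"}); err != nil {
		panic(err)
	}
	resp, err := d.Get(&GetSecret{Name: "mysecret"})
	if err != nil {
		panic(err)
	}
	fmt.Println(resp.Secret.Value)
}
```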

As an example, I’ve created an AWSSecretsManagerDriver which implements the SecretStoreDriver interface here. This fetches and mounts secrets stored in AWS Secrets Manager.

Now we can implement the interface for the Volume Driver to use this secrets backend. The volume driver creates an empty placeholder file when the user creates a volume, and only saves the secret to a file on disk when the user wishes to mount the secret into a container.

The implementation details are described in the comments below (the actual code in the methods has been removed for clarity, but you can see the complete implementation here).

package volumes

import (

"errors"

"fmt"

"os"

"path/filepath"

"rahoogan/docker-secrets-volume/secrets"

"github.com/containers/podman/v2/pkg/ctime"

"github.com/docker/go-plugins-helpers/volume"

"github.com/rs/zerolog/log"

)

const (

DRIVER_INSTALL_PATH string = "/docker/plugins/data" // The location where the plugin is installed

)

var (

DEFAULT_SECRETS_PATH string = filepath.Join(DRIVER_INSTALL_PATH, "secrets") // The location where the secrets are stored

)

type DockerSecretsVolumeDriver struct {

SecretBackend secrets.SecretStoreDriver

}

// This function registers a new secret with the volume driver.

// The secret must be uniquely identified by the Name in the secrets

// backend and must exist in the secrets backend, otherwise this request

// will fail. The secret is not stored on disk at this stage, that

// happens during mount.

func (driver *DockerSecretsVolumeDriver) Create(request *volume.CreateRequest) error {

...

}

// This function checks if the secret exists in the secret backend and

// that the Create function has been called to register the secret with

// the volume plugin. If so, it returns the source mountpoint where the

// secret will be mounted to.

func (driver *DockerSecretsVolumeDriver) Get(request *volume.GetRequest) (*volume.GetResponse, error) {

...

}

// This function lists all the secrets registered with the volume plugin

// It DOES NOT list all the secrets in the secrets backend

func (driver *DockerSecretsVolumeDriver) List() (*volume.ListResponse, error) {

...

}

// This function removes the secret registered with the volume plugin

// This DOES NOT remove the secret from the secrets backend

func (driver *DockerSecretsVolumeDriver) Remove(request *volume.RemoveRequest) error {

...

}

// This function provides the mountpoint where the secret is mounted

// (or will be mounted)

func (driver *DockerSecretsVolumeDriver) Path(request *volume.PathRequest) (*volume.PathResponse, error) {

...

}

// This function fetches the secret from the secrets backend and writes

// it to a file on disk so that it can be mounted into a container

func (driver *DockerSecretsVolumeDriver) Mount(request *volume.MountRequest) (*volume.MountResponse, error) {

...

}

// This function does not need to do anything.

// It could be improved by checking if there are any containers using

// the volume and trying to delete the mount file if not

func (driver *DockerSecretsVolumeDriver) Unmount(request *volume.UnmountRequest) error {

...

}

Finally, to run our plugin, we instruct the volume driver to use a specific secrets backend:

// Create and setup a SecretStore driver

secretsBackend := awssm.AWSSecretsManagerDriver{

RequestTimeout: 0,

SecretsPath: awssm.DEFAULT_DRIVER_SECRETS_PATH,

}

// Setup the secrets backend

secretsBackend.Setup()

// Initialize a docker volume driver with the secretstore backend

driver := volumes.DockerSecretsVolumeDriver{SecretBackend: &secretsBackend}

// Plugin starts and listens on a unix socket

handler := volume.NewHandler(&driver)

fmt.Printf("Listening on %s\n", PLUGIN_SOCKET_ADDRESS)

handler.ServeUnix(PLUGIN_SOCKET_ADDRESS, 0)

3. Choosing a plugin type

Once you’ve written the plugin - you have to build, install and run it. There are currently two methods of deploying docker plugins:

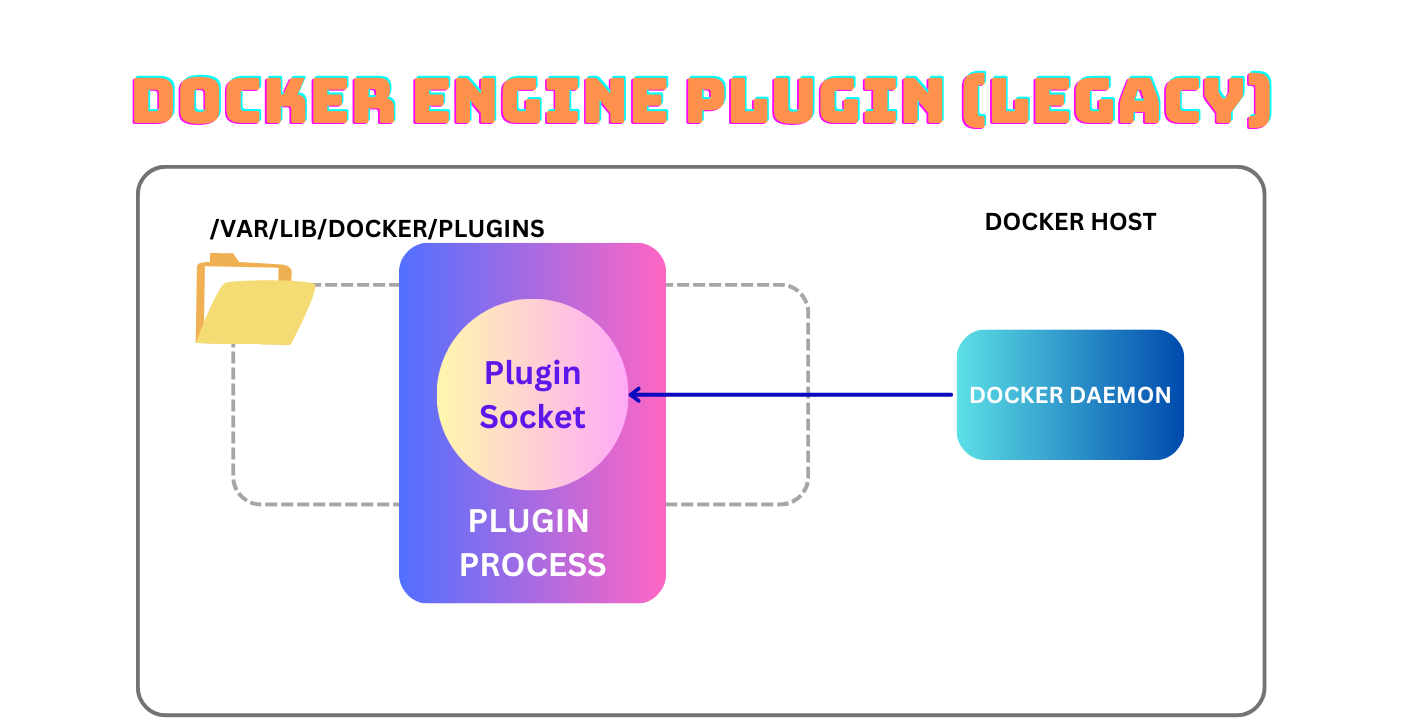

Method 1: Docker Engine Plugin System (Legacy) - v1

The legacy system (also known as unmanaged plugins or v1) requires you to manage the plugin process yourself. This is a bit unwieldy, and you have to ensure that the plugin process is started before the docker daemon process - using something like systemd to manage the dependency.

The plugin runs alongside the docker daemon on the docker host and they communicate with each other via the open plugin socket.

But how does the daemon know the plugin exists and should be used? It treats any unix socket in /run/docker/plugins as a plugin (plugins reachable over the network are registered with .spec or .json files in /etc/docker/plugins or /usr/lib/docker/plugins instead) - so all you have to do to register a plugin is to chuck a socket in that dir and make it respond to the right plugin API calls.

As the name implies, this system is legacy and probably shouldn’t be used by anyone writing a plugin today. However there are plenty of older plugins out there which still use this method (see here).

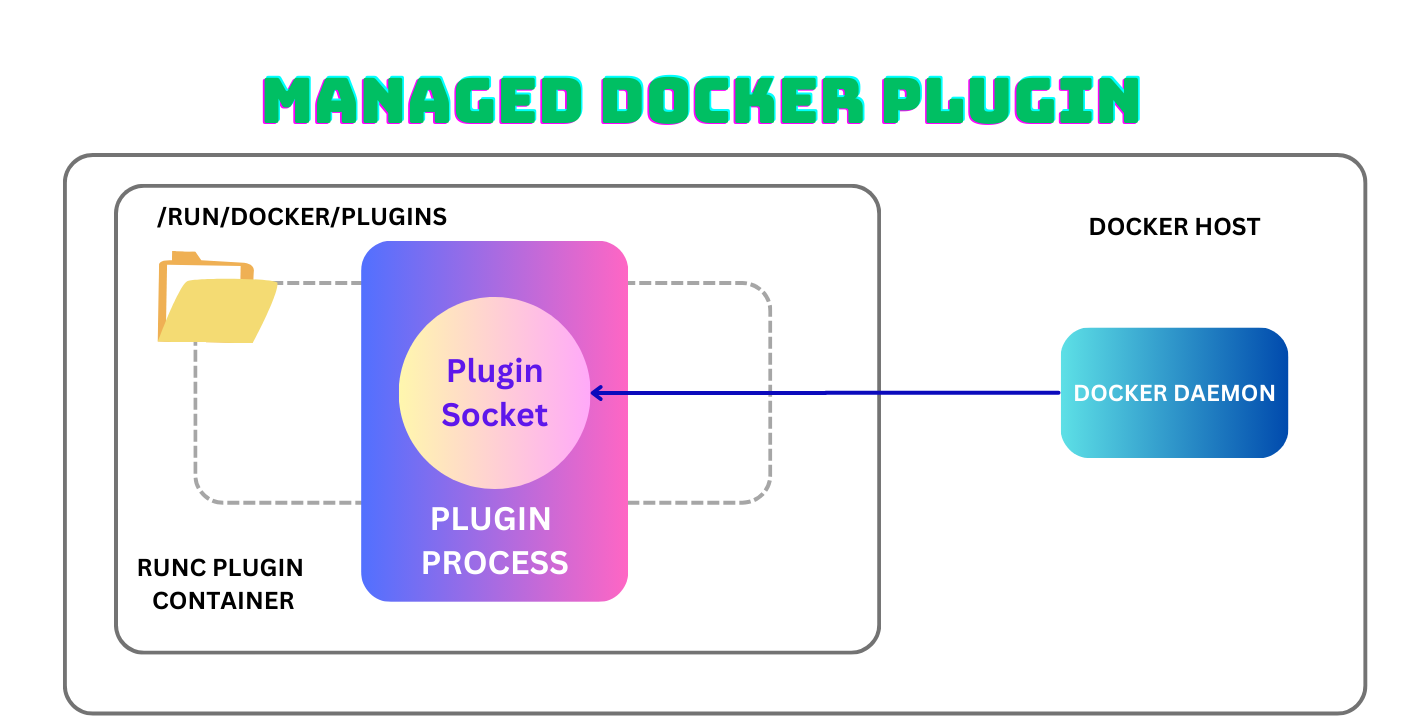

Method 2: Managed Docker Plugin System

The newer way of doing things is the managed plugin system. Plugins are packaged up into a root filesystem and a plugin definition file and stored in Docker Hub.

Users can then install plugins using the docker plugin command, which downloads the contents of the package from Docker Hub into a plugin directory managed by docker.

docker plugin install <dockerhub location>

After installing, you can enable a plugin:

docker plugin enable <plugin name>

When you enable a plugin, docker runs the plugin image as a runc container using the plugin’s rootfs and plugin definition (which also serves as the runc config file). This container is not listed alongside your regular containers: docker ps -a won’t bring it up, since it is run via runc outside of the dockerd process.

You can read more about managed plugins here.

4. Packaging our plugin

We’re going to use the managed plugin system to deploy our plugin.

First we need to create a docker image which can run our plugin:

FROM golang:1.22.4-alpine as builder

COPY . /go/src/github.com/rahoogan/docker-secrets-volume

WORKDIR /go/src/github.com/rahoogan/docker-secrets-volume

RUN set -ex \

&& apk add --no-cache --virtual .build-deps \

gcc libc-dev \

&& go install --ldflags '-extldflags "-static"' \

&& apk del .build-deps

CMD ["/go/bin/docker-secrets-volume"]

FROM alpine

RUN mkdir -p /run/docker/plugins /mnt/state /mnt/volumes

COPY --from=builder /go/bin/docker-secrets-volume .

CMD ["/docker-secrets-volume"]

Then we build it, but rather than running it - we run docker create - which creates the container but doesn’t run it:

cd <path to the plugin code repo>

docker build -t rootfsimage .

id=$(docker create rootfsimage true)

Next we export the filesystem of the newly created container and unpack it into a directory on the host:

cd /tmp/

# We're going to call our plugin "dsv"

sudo mkdir -p dsv/rootfs

# Extract container fs to a temporary directory on host

sudo docker export "$id" | sudo tar -x -C dsv/rootfs

# Cleanup

docker rm -vf "$id"

docker rmi rootfsimage

This will be the rootfs that runc uses to run our plugin in a container.

Next, we need to create a config definition for our plugin. This is essentially a runc configuration file which tells docker how to run the plugin container. I’ll explain the important parameters:

Env: defines the environment variables which can be set in the plugin container via docker plugin set <plugin name> <ENV_NAME=ENV_VALUE>. Users of our plugin will have to set their AWS credentials in the plugin after they install and before they enable it.

Socket: defines the name of the socket file which will be created in /run/docker/plugins within the plugin container. This will be used by the docker daemon to communicate with the plugin.

Network: defines the network that the plugin container should use. We’re choosing to use the host network to allow the container to use local services (such as localstack for testing).

PropagatedMount: specifies any paths within the container which will be persisted (via bind mounting) outside of the container. This is useful to ensure there is no data loss when upgrading plugin versions for example.

{

"Description": "A docker volume plugin to manage secrets from remote secrets managers",

"Documentation": "https://docs.docker.com/engine/extend/plugins/",

"Entrypoint": [

"/docker-secrets-volume"

],

"Env": [

{

"Description": "Enable debug logging",

"Name": "DEBUG",

"Settable": [

"value"

],

"Value": "0"

},

{

"Description": "The AWS access key id",

"Name": "AWS_ACCESS_KEY_ID",

"Settable": [

"value"

],

"Value": ""

},

{

"Description": "The AWS secret access key",

"Name": "AWS_SECRET_ACCESS_KEY",

"Settable": [

"value"

],

"Value": ""

},

{

"Description": "The AWS region to use",

"Name": "AWS_REGION",

"Settable": [

"value"

],

"Value": "us-east-2"

},

{

"Description": "The AWS endpoint URL to use (for example a localstack instance)",

"Name": "AWS_ENDPOINT_URL",

"Settable": [

"value"

],

"Value": ""

}

],

"Interface": {

"Socket": "dsv.sock",

"Types": [

"docker.volumedriver/1.0"

]

},

"Linux": {

"Capabilities": [

"CAP_SYS_ADMIN"

],

"AllowAllDevices": false,

"Devices": null

},

"Mounts": null,

"PropagatedMount": "/docker/plugins/data/secrets",

"Network": {

"Type": "host"

},

"User": {},

"Workdir": ""

}
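Inside the plugin container, the Env entries arrive as ordinary environment variables. As a minimal sketch (not the project’s actual code), the plugin could read them like this. pluginConfig and loadConfig are hypothetical names, and the fallback region is just a choice made for this example.

```go
package main

import (
	"fmt"
	"os"
)

// pluginConfig is a hypothetical struct holding the settings from the environment.
type pluginConfig struct {
	Debug       bool
	Region      string
	EndpointURL string // empty means "use the default AWS endpoint"
}

// loadConfig reads the settings through getenv, which is os.Getenv in
// production and can be swapped for a fake in tests.
func loadConfig(getenv func(string) string) pluginConfig {
	cfg := pluginConfig{
		Debug:       getenv("DEBUG") == "1",
		Region:      getenv("AWS_REGION"),
		EndpointURL: getenv("AWS_ENDPOINT_URL"),
	}
	if cfg.Region == "" {
		cfg.Region = "us-east-2" // fallback chosen for this sketch
	}
	return cfg
}

func main() {
	cfg := loadConfig(os.Getenv)
	fmt.Printf("debug=%v region=%s endpoint=%q\n", cfg.Debug, cfg.Region, cfg.EndpointURL)
}
```

Passing the lookup function in, rather than calling os.Getenv directly, keeps the parsing testable without touching the real environment.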

Finally, save the plugin config above as config.json in the same directory as the rootfs (/tmp/dsv in our case), and create the plugin. We’re going to really flex our creativity muscles and call our plugin dsv (short for “docker secrets volume”):

docker plugin create dsv /tmp/dsv

docker plugin enable dsv

docker plugin ls

Now we’re finally ready to use our plugin!

5. Running our plugin

Before we run our plugin, we need to set the environment variables for the remote secrets backend to work. I’m using localstack to run a local AWS secretsmanager instance, which I can point to by using the AWS_ENDPOINT_URL env var. Note that plugin environment variables can only be set when the plugin is disabled:

docker plugin disable dsv

docker plugin set dsv AWS_ACCESS_KEY_ID="<YOUR ACCESS KEY ID>"

docker plugin set dsv AWS_SECRET_ACCESS_KEY="<YOUR ACCESS KEY SECRET>"

# Only set this if you're using localstack

docker plugin set dsv AWS_ENDPOINT_URL="http://172.17.0.2:4566"

docker plugin set dsv AWS_REGION="us-east-2"

docker plugin set dsv DEBUG=1

docker plugin enable dsv

And that’s it! We can now use the plugin:

# Create a secret in secrets manager

$ awslocal secretsmanager create-secret --name mysecret --secret-string "dontlookatme!"

# Mount the secret as a volume in a container

$ docker run --rm --volume-driver dsv -v mysecret:/run/secrets/hello ubuntu cat /run/secrets/hello

dontlookatme!

# Alternatively, you could also use the --mount option

$ docker run --rm --mount type=volume,volume-driver=dsv,src=mysecret,target=/run/secrets/mysecret ubuntu cat /run/secrets/mysecret

dontlookatme!

6. Gotchas

There are a few gotchas when creating docker volume plugins:

1. Make sure you use the PropagatedMount to store volumes.

The docker daemon needs the volume mountpoints to exist on the host for it to be able to mount them into containers.

If used as part of the v2 plugin architecture, mountpoints that are part of paths returned by the plugin must be mounted under the directory specified by PropagatedMount in the plugin configuration (Source)
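One cheap way to catch this mistake early is to check every mountpoint the plugin is about to return against the PropagatedMount directory. The sketch below shows one way to do that check. underPropagatedMount is a hypothetical helper, not something provided by docker or the helper library.

```go
package main

import (
	"fmt"
	"path/filepath"
	"strings"
)

// underPropagatedMount reports whether mountpoint is root itself or a path
// inside root. Both paths should be absolute.
func underPropagatedMount(root, mountpoint string) bool {
	rel, err := filepath.Rel(root, mountpoint)
	if err != nil {
		return false
	}
	// A relative path that climbs out with ".." is outside root.
	return rel != ".." && !strings.HasPrefix(rel, ".."+string(filepath.Separator))
}

func main() {
	root := "/docker/plugins/data/secrets"
	fmt.Println(underPropagatedMount(root, "/docker/plugins/data/secrets/mysecret"))
	fmt.Println(underPropagatedMount(root, "/tmp/mysecret"))
}
```

Comparing via filepath.Rel rather than a plain string prefix avoids treating a sibling such as /docker/plugins/data/secrets-other as being inside the root.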

2. Don’t mess with docker managed directories

Don’t be like me and think you’re doing something clever by mounting /run/docker/ or /var/lib/docker into the plugin container. Docker will mount the necessary directories and manage them. If you do it yourself you’ll only get in the way and break something (like overwriting the /run/docker/plugins directory where the plugin socket lives).

3. Make sure your plugin listens on the right socket

When using the managed plugin system (v2), docker looks for the plugin socket in /run/docker/plugins inside the plugin container, under the name given by Socket in the plugin config (dsv.sock for us). Make sure your plugin process is listening on that socket:

// Plugin starts and listens on a unix socket

handler := volume.NewHandler(&driver)

fmt.Printf("Listening on %s\n", "/run/docker/plugins/dsv.sock")

handler.ServeUnix("/run/docker/plugins/dsv.sock", 0)

And of course, make sure the /run/docker/plugins/ directory exists on your rootfs :)

7. Someone Call Security

Ok great, we managed to shunt some secrets into a container. What are the security implications of this?

1. Don’t use this on a shared system

The secrets managed by the plugin are stored in a docker-managed container. So anyone who can run docker commands can see your secrets.

Also, it’s trivial to just inspect the plugin to get the stored AWS credentials:

docker plugin inspect dsv -f "{{ .Settings.Env }}"

[DEBUG=1 AWS_ACCESS_KEY_ID=<YOUR_AWS_KEY> AWS_SECRET_ACCESS_KEY=<YOUR_AWS_SECRET> AWS_REGION=us-east-2 AWS_ENDPOINT_URL=http://172.17.0.2:4566]

So yeah, just make sure you use this on a development or local machine where only you have access.

2. Unrestricted mount locations within a container

The nice thing about docker secrets or secrets in docker-compose is that, by default, they mount the secret automatically into /run/secrets within the container, so you don’t have to pick a location yourself. For swarm secrets this is an in-memory filesystem, and access can be restricted to the specific users or groups which need the secrets.

Our plugin can be used to mount the secret anywhere in the container, to potentially insecure locations where multiple in-container users can get access to it. This puts the onus on the user to manage permissions correctly.

8. Conclusion

So now that I’ve sufficiently scared you, where can we use this? I see this as another tool in the developer’s toolbox for local development. It’s potentially a little bit safer than leaving secrets in local files lying around. It also makes it a little bit easier to securely mount secrets from remote secrets managers.